6 Search Engines and Portals

6.1 Searching the Internet

6.1.1 Finding Something on the Net

Searching for information on the Internet is difficult for many people, so this section explains how search engines work and how to find what you are looking for. For your online business, it is very important to understand how search engines work and how people look for things on the Internet.

Some people who use a web browser simply enter a keyword where the URL is normally entered. Sometimes this leads to good results, and the reason is easily explained. If you enter a word into a web browser, the browser first adds "http://" to it and tries to find a web page. If that does not work, it adds ".com" at the end and then "www." after the "http://" part. So entering "motorcycles" will eventually lead you to "http://www.motorcycles.com/", which leads to the Honda web site in the US. If you are looking for Harley-Davidson motorcycles, you could enter "Harley-Davidson" and the browser would expand the name to "http://www.harley-davidson.com/", the web site of the Harley-Davidson manufacturer. Some older browsers do not support the expansion of keywords, so people need to add these parts manually, but adding "http://www." and ".com" to the keyword is not difficult. This is why so many people registered domain names in the early years without using them: they had recognized the value these names would have in the future.

The fact that web browsers try to guess what you mean is exploited by a new service called HTTP2. It seems to have invented a new protocol for transferring information between a web server and a web browser, but in reality it does something completely different. You can go to its web site and register a name that uses http2// instead of http://. The trick is that the colon is omitted. So if you have a site registered at HTTP2, the "URL" may look like this: http2//ebusiness. HTTP2 exploits a feature of most browsers: if they cannot find the address http://http2//ebusiness, they expand it to http://www.http2.com//ebusiness, which is then redirected to the site you have registered at HTTP2.
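The expansion behaviour described in the last two paragraphs can be sketched in Python. This is a minimal illustration, assuming the simplified order given in the text (try the entry as typed, then with ".com", then with "www." and ".com"); real browsers differed in detail, and the set of "known hosts" is a stand-in for a real DNS lookup.

```python
from typing import List, Optional, Set
from urllib.parse import urlsplit


def expansion_candidates(entry: str) -> List[str]:
    """Return the URLs a browser might try, in order, for a bare entry.

    Simplified model of the behaviour described in the text:
    1. prepend "http://"
    2. additionally append ".com" to the host part
    3. additionally prepend "www." to the host part
    Anything after the first "/" is kept exactly as typed.
    """
    host, slash, path = entry.partition("/")
    host = host.lower()  # host names are case-insensitive
    rest = slash + path if slash else "/"
    return [
        f"http://{host}{rest}",
        f"http://{host}.com{rest}",
        f"http://www.{host}.com{rest}",
    ]


def first_reachable(entry: str, known_hosts: Set[str]) -> Optional[str]:
    """Return the first candidate whose host exists (stand-in for a DNS lookup)."""
    for url in expansion_candidates(entry):
        if urlsplit(url).hostname in known_hosts:
            return url
    return None


print(first_reachable("Harley-Davidson", {"www.harley-davidson.com"}))
# http://www.harley-davidson.com/
print(first_reachable("http2//ebusiness", {"www.http2.com"}))
# http://www.http2.com//ebusiness
```

The HTTP2 case works because everything after the first slash, including the second slash, is carried along unchanged while only the host part is expanded.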

If you are looking for a specific item, just type in its name and see if there is a web site with the corresponding information. If there is no such web site, try brand names associated with your search. If you live in Germany and need information on ties ("Krawatte" is German for tie), you could try http://www.krawatte.de/ and you will reach an online shop that sells ties and gives information on how to wear them properly. If you are interested in more information on Philips products in the Netherlands, you could try http://www.philips.nl/ and will land on the web site of Philips consumer products. Ferrari fans in Italy go to http://www.ferrari.it/ to buy merchandise or to join the Ferrari fan or owners' club. Depending on the country you live in, you will have other ideas for finding your information. Some companies, especially small and medium-sized enterprises, prefer to use the top level domain (TLD) of their country of origin. As a rule of thumb, American companies mainly use .com; companies elsewhere may use it, but not necessarily. If you are searching for a company in France, for example, use ".fr" as the suffix.

Most search engines on the web have a basic search form and a more complex search function that allows more in-depth searches. Most search engines support Boolean operations that combine terms using "AND", "OR", or "NOT". If you want all words to be present in the documents you are looking for, link the keywords with "AND". If you use "OR", at least one of the keywords needs to be present in the document. With "NOT", you can exclude words that you do not want to appear in the search results. If you do not give any Boolean operator, most search engines default to "OR".
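The three Boolean operators can be illustrated with a toy search over an in-memory collection. This is a hedged sketch, not how any particular engine worked: the three sample documents are made up, words are matched case-insensitively as whole tokens, and `op` defaults to "OR" to mirror the default described above.

```python
import re

# Made-up sample documents for illustration.
DOCS = {
    "d1": "Harley Davidson motorcycles for sale",
    "d2": "Honda motorcycles and scooters",
    "d3": "Used cars and motorcycles",
}


def terms(text):
    """Lower-case word tokens of a text."""
    return set(re.findall(r"\w+", text.lower()))


def boolean_search(docs, words, op="OR", exclude=()):
    """Return ids of docs matching `words` combined with AND/OR, minus NOT words."""
    wanted = [w.lower() for w in words]
    unwanted = {w.lower() for w in exclude}
    hits = set()
    for doc_id, text in docs.items():
        found = terms(text)
        if op == "AND":
            matched = all(w in found for w in wanted)
        elif op == "OR":
            matched = any(w in found for w in wanted)
        else:
            raise ValueError(f"unsupported operator: {op}")
        # NOT: drop documents containing any excluded word.
        if matched and not (found & unwanted):
            hits.add(doc_id)
    return hits


print(sorted(boolean_search(DOCS, ["motorcycles", "honda"], op="AND")))  # ['d2']
print(sorted(boolean_search(DOCS, ["harley", "honda"])))                 # ['d1', 'd2']
print(sorted(boolean_search(DOCS, ["motorcycles"], exclude=["cars"])))   # ['d1', 'd2']
```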

Some search engines support the "+" and "-" operators, which let you do much the same as the Boolean operators. A "+" preceding a term means that the term must be present in the search results; a "-" means that it must not be present.
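A query using these prefixes can be split into required, excluded and optional terms and then checked against a document. The sketch below assumes single-word terms (a hyphenated term such as "+harley-davidson" would need extra tokenization) and treats unprefixed terms as optional, which is one common interpretation rather than a universal rule.

```python
import re


def parse_query(query):
    """Split a query into required (+), excluded (-) and optional terms."""
    required, excluded, optional = set(), set(), set()
    for token in query.lower().split():
        if token.startswith("+") and len(token) > 1:
            required.add(token[1:])
        elif token.startswith("-") and len(token) > 1:
            excluded.add(token[1:])
        else:
            optional.add(token)
    return required, excluded, optional


def matches(text, query):
    """True if text has every +term, no -term, and (absent +terms) some optional term."""
    words = set(re.findall(r"\w+", text.lower()))
    required, excluded, optional = parse_query(query)
    if (not required <= words) or (words & excluded):
        return False
    # Once required terms are present, optional terms would only affect ranking.
    return bool(required) or bool(words & optional)


print(matches("Honda motorcycles and scooters", "+motorcycles -harley"))  # True
print(matches("Harley Davidson motorcycles", "+motorcycles -harley"))     # False
```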

In addition to these standard search features, it is possible to truncate words and use an asterisk ("*") to complete them, so that multiple forms of a given word are found. By putting quotes around several words, you can specify that the words must appear next to each other in the retrieved items. These advanced search features vary from search engine to search engine, so before using a search engine it is helpful to read its FAQ. The search results become much more precise and the time required for searching is reduced significantly.
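Truncation and phrase search can be sketched with regular expressions and a sliding window over word tokens. This assumes "*" may appear anywhere in a term and stands for any run of word characters, and that quoted phrases are matched on words while punctuation is ignored; actual engines each had their own rules, which is exactly why their FAQs are worth reading.

```python
import re


def wildcard_pattern(term):
    """Compile a truncated term such as "motorcycl*" into a whole-word regex."""
    pieces = [re.escape(piece) for piece in term.lower().split("*")]
    return re.compile(r"\b" + r"\w*".join(pieces) + r"\b")


def word_forms(text, term):
    """All distinct words in `text` matching the truncated `term`."""
    return sorted(set(wildcard_pattern(term).findall(text.lower())))


def contains_phrase(text, phrase):
    """True if the words of `phrase` occur next to each other, in order, in `text`."""
    words = re.findall(r"\w+", text.lower())
    target = re.findall(r"\w+", phrase.lower())
    n = len(target)
    return n > 0 and any(words[i:i + n] == target for i in range(len(words) - n + 1))


print(word_forms("Motorcycles, motorcyclists and motorcycle tours", "motorcycl*"))
# ['motorcycle', 'motorcycles', 'motorcyclists']
print(contains_phrase("Visit the Harley Davidson museum", '"Harley Davidson"'))  # True
print(contains_phrase("Davidson rides a Harley", '"Harley Davidson"'))            # False
```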

6.1.2 Different Types of Search Engines

If you do not find the required information using the tips from the previous section, you need to consult an online service to find the information you are looking for. Three different types of services have been established over the years: crawlers, directories and meta search engines.

The first crawler was created back in 1993. It was called the World Wide Web Worm. It crawled from one site to the next and indexed all pages by saving their content in a huge database. Crawlers, or spiders, visit a web page, read it, and then follow links to other pages within the site and even to other sites. Web crawlers return to each site on a regular basis, such as every month or two, to look for changes. Everything a crawler finds goes into a database that people can query.
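The crawl-and-store loop described above can be sketched as a breadth-first traversal. To keep the example self-contained and runnable offline, `fetch` is a hypothetical function (here a dictionary lookup over three made-up pages on reserved `.example` hosts) rather than a real HTTP client; a real crawler would also honour robots.txt and limit its request rate.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin


class LinkExtractor(HTMLParser):
    """Collect the href values of all <a> tags in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start_url, fetch, max_pages=100):
    """Breadth-first crawl from start_url; returns {url: html} for fetched pages.

    `fetch(url)` must return the page's HTML, or None if it cannot be retrieved.
    """
    seen = {start_url}
    queue = deque([start_url])
    database = {}
    while queue and len(database) < max_pages:
        url = queue.popleft()
        html = fetch(url)
        if html is None:
            continue
        database[url] = html  # store the page content
        extractor = LinkExtractor()
        extractor.feed(html)
        for href in extractor.links:
            # Resolve relative links and drop "#fragment" parts.
            link, _fragment = urldefrag(urljoin(url, href))
            if link.startswith(("http://", "https://")) and link not in seen:
                seen.add(link)
                queue.append(link)
    return database


# Hypothetical in-memory "web" standing in for real HTTP requests.
WEB = {
    "http://a.example/": '<a href="/about">About</a> <a href="http://b.example/">B</a>',
    "http://a.example/about": '<p>About us</p> <a href="/">Home</a>',
    "http://b.example/": '<a href="http://a.example/about#team">Team</a>',
}

print(sorted(crawl("http://a.example/", WEB.get)))
# ['http://a.example/', 'http://a.example/about', 'http://b.example/']
```

The `seen` set is what stops the crawler from looping forever when pages link back to each other, as the home and about pages do here.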

The advantage of web crawlers is that they have an extensive database in which almost the entire Internet is indexed. The disadvantage is that you get thousands of web pages in response to almost any request.

Web directories work a little differently. First of all, they contain a structured tree of information. Everything in this tree is entered either by the webmaster who wants to announce a new web page or by the directory maintainer who reviews the submitted web pages. Directories where webmasters can submit both the URL (Uniform Resource Locator) and the description normally contain more misleading information.
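A directory's structured tree can be modelled as nested categories, each holding human-entered (URL, description) pairs. The sketch below is illustrative only: the category names and the entry are made up, and the search simply scans the descriptions, which is where the quality of the human-written text matters.

```python
from dataclasses import dataclass, field


@dataclass
class Category:
    """One node in a directory tree."""
    name: str
    children: dict = field(default_factory=dict)  # sub-category name -> Category
    entries: list = field(default_factory=list)   # (url, description) pairs

    def child(self, name):
        """Return the named sub-category, creating it if necessary."""
        return self.children.setdefault(name, Category(name))


def add_site(root, path, url, description):
    """File a submitted site under a category path such as ["Recreation", "Motorcycles"]."""
    node = root
    for part in path:
        node = node.child(part)
    node.entries.append((url, description))


def search(node, word, path=()):
    """Return (category path, url) for every entry whose description contains `word`."""
    hits = [("/".join(path), url) for url, description in node.entries
            if word.lower() in description.lower()]
    for name, child in node.children.items():
        hits.extend(search(child, word, path + (name,)))
    return hits


root = Category("Top")
add_site(root, ["Recreation", "Motorcycles", "Makes"],
         "http://www.harley-davidson.com/", "Official Harley-Davidson site")
print(search(root, "harley"))
# [('Recreation/Motorcycles/Makes', 'http://www.harley-davidson.com/')]
```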

Getting into most web directories is a combination of luck and quality. Although anyone is able to submit a web page, there is no guarantee that a certain page will be included. Some directories charge for submission, which is basically a rip-off.

Some crawlers maintain an associated directory. If your site is in the directory, your web site will normally be ranked higher, as it is already pre-classified. Many directories work together with crawlers in order to deliver results when nothing can be found in the directory.

Meta search engines do not have a database of URLs and descriptions. Instead, they have a database of search engines. If you enter your keywords into a meta search engine, it sends requests to all the directories and crawlers stored in its database. Meta search engines with more sophisticated software in the background are able to detect duplicate URLs that come back from the various search engines and present each URL only once to the user.
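The merging step can be sketched as follows. This is a hedged illustration: the engines are stand-in functions returning made-up result lists, duplicates are detected with a deliberately simple normalization (lower-case scheme and host, a missing path treated as "/", fragment dropped), and merged results are ordered by their best rank in any engine, with ties going to URLs that more engines returned.

```python
from urllib.parse import urlsplit, urlunsplit


def normalize(url):
    """Canonical form used only for spotting duplicates."""
    parts = urlsplit(url.strip())
    return urlunsplit((parts.scheme.lower(), parts.netloc.lower(),
                       parts.path or "/", parts.query, ""))


def meta_search(query, engines):
    """Send `query` to every engine and merge the answers without duplicates.

    `engines` maps an engine name to a function returning URLs, best first.
    Returns (url, [engines that returned it]) pairs.
    """
    merged = {}  # normalized URL -> [best rank, URL as first seen, set of engines]
    for name, engine in engines.items():
        for rank, url in enumerate(engine(query), start=1):
            key = normalize(url)
            if key in merged:
                entry = merged[key]
                entry[0] = min(entry[0], rank)
                entry[2].add(name)
            else:
                merged[key] = [rank, url, {name}]
    ordered = sorted(merged.values(), key=lambda e: (e[0], -len(e[2])))
    return [(url, sorted(sources)) for _rank, url, sources in ordered]


# Stand-in engines with made-up results.
ENGINES = {
    "crawler": lambda q: ["http://www.Example.com/", "http://www.example.org/tours"],
    "directory": lambda q: ["http://www.example.com", "http://www.example.net/"],
}

for url, sources in meta_search("camping italy", ENGINES):
    print(url, sources)
# http://www.Example.com/ ['crawler', 'directory']
# http://www.example.org/tours ['crawler']
# http://www.example.net/ ['directory']
```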

6.1.3 Net Robots

In the early days of the Internet, web surfing was a pleasant hobby for individuals who could choose from a handful of useful web addresses. With the growth of the Internet, however, it has become more difficult to find the right information through simple browsing. Programs that behave like web browsers are far more efficient: they browse through the information and at the same time store the content in a form that makes it easy to retrieve afterwards. These programs are called crawlers, robots or spiders.

A crawler retrieves a document and then recursively retrieves all documents linked to it. While traversing each document, the crawler indexes the information according to predefined criteria. The information goes into searchable databases, which Internet users can then query to retrieve specific information. The robots crawl the Internet 24 hours a day and try to index as much information as possible.
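Indexing "according to predefined criteria" can take many forms. A common structure is an inverted index that maps each word to the pages containing it; the sketch below assumes the simplest criterion (every lower-case word token is indexed) and answers queries with AND semantics. The page texts are made up.

```python
import re
from collections import defaultdict


def build_index(pages):
    """Map every lower-case word to the set of URLs whose text contains it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in re.findall(r"\w+", text.lower()):
            index[word].add(url)
    return index


def lookup(index, words):
    """URLs containing all of `words` (AND semantics)."""
    postings = [index.get(word.lower(), set()) for word in words]
    return set.intersection(*postings) if postings else set()


PAGES = {
    "http://a.example/": "Camping in Tuscany near Pisa",
    "http://b.example/": "Camping in Bavaria",
}
index = build_index(PAGES)
print(sorted(lookup(index, ["camping"])))             # ['http://a.example/', 'http://b.example/']
print(sorted(lookup(index, ["camping", "tuscany"])))  # ['http://a.example/']
```

Answering a query then only touches the entries for the query words, rather than rereading every stored page.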

In order to keep the databases up to date, the crawlers revisit links to verify whether they are still up and running or have been removed. Dead links occur when users move information or give up their online presence. In this case, the information needs to be removed from the database.
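The revisit step can be sketched like this, assuming a hypothetical `fetch(url)` that returns the page's current text or `None` when the page is gone (for example, when the server answers with HTTP 404). Changed pages are refreshed and dead ones are removed from the database.

```python
def revisit(database, fetch):
    """Re-fetch every stored URL, refresh changed pages and drop dead links.

    `database` maps URL -> stored content and is updated in place.
    Returns (updated URLs, removed URLs).
    """
    updated, removed = [], []
    for url in list(database):  # iterate over a copy: entries may be deleted
        content = fetch(url)
        if content is None:
            del database[url]
            removed.append(url)
        elif content != database[url]:
            database[url] = content
            updated.append(url)
    return updated, removed


db = {"http://a.example/": "old text", "http://b.example/": "same", "http://c.example/": "gone soon"}
live_web = {"http://a.example/": "new text", "http://b.example/": "same"}
print(revisit(db, live_web.get))  # (['http://a.example/'], ['http://c.example/'])
print(sorted(db))                 # ['http://a.example/', 'http://b.example/']
```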

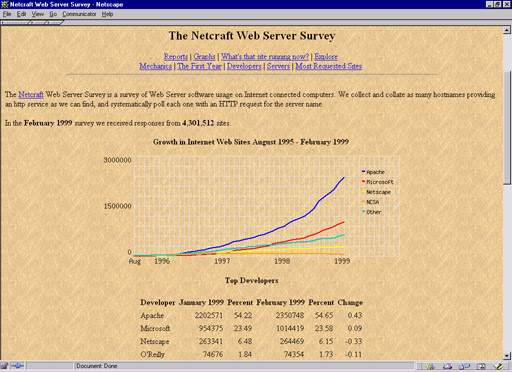

Some specialized crawlers wander around and collect information for statistical analysis, such as the NetCraft robot (see Figure 1), which collects information on the web servers used on the Internet.

Figure 1: Result of the NetCraft Robot

Other robots perform site mirroring. Software archives, for example, are mirrored daily to spread the load of a single server across many servers on the Internet. Another reason is that it is easier to copy new information once and then make it available to customers in a particular country, instead of letting them download it from a far more distant server. Tucows uses this technology to mirror shareware and freeware programs throughout the world, in order to guarantee fast download times for every user.

The Robots Exclusion Standard

The Robots Exclusion Standard defines how robots should behave on the web and how you can prevent robots from visiting your site. Although these rules cannot be enforced, most Internet robots do adhere to this standard. If you want robots to ignore some of the documents on your web server (or even all of them), you need to create a file called robots.txt, which must be placed in the root directory of the web server. You can check whether any web server has a robots.txt file (try http://www.gallery-net.com/robots.txt, for example).

A sample robots.txt could look like this:

User-agent: *
Disallow: /cgi-bin/

This simple file disallows access to the /cgi-bin/ directory on the web server for all robots (marked by the asterisk) scanning the site. By protecting the /cgi-bin/ directory, you ensure that robots which follow the standard do not read any documents from that directory, which keeps these documents out of search engine results.
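Python's standard library includes a parser for this format, `urllib.robotparser`, which a well-behaved robot can consult before each request. The sketch below feeds it the sample rules directly instead of downloading a robots.txt file; "ExampleBot" and the www.example.com URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

SAMPLE_RULES = """\
User-agent: *
Disallow: /cgi-bin/
"""

robots = RobotFileParser()
robots.parse(SAMPLE_RULES.splitlines())

print(robots.can_fetch("ExampleBot", "http://www.example.com/cgi-bin/search"))  # False
print(robots.can_fetch("ExampleBot", "http://www.example.com/index.html"))      # True

# A blank line ends a record, so in this variant the Disallow line belongs to
# no User-agent record and is ignored: everything is allowed.
split_rules = RobotFileParser()
split_rules.parse(["User-agent: *", "", "Disallow: /cgi-bin/"])
print(split_rules.can_fetch("ExampleBot", "http://www.example.com/cgi-bin/search"))  # True
```

For a live site, `set_url()` followed by `read()` downloads and parses the file in one step. The last example shows why the lines of a record must not be separated by an empty line.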

Crawlers perform a very useful task, but they consume a large part of the network bandwidth, which can frustrate end users. Crawlers with programming errors can also cause unintended denial of service attacks on certain web servers, as they try to retrieve information at a rate far too high for that server. But the major problem is the lack of intelligence. A crawler cannot really decide which information is relevant and which is not. Combining web crawler results with results from online directories therefore gives users the best search results.
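One common safeguard against the overload described above is a per-host politeness delay. The sketch below assumes a fixed minimum interval between two requests to the same host (the delay values are arbitrary examples); the clock and sleep functions are injectable so the behaviour can be checked without actually waiting.

```python
import time
from urllib.parse import urlsplit


class PoliteScheduler:
    """Enforce a minimum delay between consecutive requests to the same host."""

    def __init__(self, delay_seconds=1.0, clock=time.monotonic, sleep=time.sleep):
        self.delay = delay_seconds
        self.clock = clock
        self.sleep = sleep
        self.last_request = {}  # host -> time of the previous request

    def wait_for(self, url):
        """Block until `url`'s host may be contacted again, then record the request."""
        host = urlsplit(url).hostname
        now = self.clock()
        previous = self.last_request.get(host)
        if previous is not None and now - previous < self.delay:
            self.sleep(self.delay - (now - previous))
            now = self.clock()
        self.last_request[host] = now


scheduler = PoliteScheduler(delay_seconds=0.2)
for url in ["http://a.example/1", "http://a.example/2", "http://b.example/"]:
    scheduler.wait_for(url)  # the second call pauses about 0.2 s; the third does not
    # ... the actual page request would go here ...
```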

There are also some problems with robot technology on the Internet. Some robot implementations have, intentionally or unintentionally, overloaded networks and servers in the past. Some robots had bugs and started to flood servers with requests; others were deliberately tuned to behave like this. Human interaction can also be a problem, as a person may misconfigure a robot or not understand the impact the configuration will have on the servers it contacts.

Despite these problems, you can protect your site by implementing the Robots Exclusion Standard on your web server (see Table 1). Most robots are well managed and cause no problems. They offer a valuable service to the Internet community.

6.1.4 Using a Search Engine

All three types of search engine are used in the same way, and using them is very simple. You are normally given a text field in which you enter your keywords. The keywords are what matter: get them right and you will get the right answers. It is best to start with general keywords and then add details.

If you are looking for camp sites in Italy, for example, start with the keywords "Camping Italy". If this does not produce a useful response, add more details such as the region: "Camping Italy Tuscany Pisa Beach". The more keywords you add, the fewer results you will get (in some cases you will not get any results, so just retry with other or fewer keywords). Also try keywords in different languages (e.g. "Toskana" or "Toscana" instead of "Tuscany", or "Italien" or "Italia" instead of "Italy"). This is often very useful, but has the disadvantage that you need to speak the particular language to understand the web page.
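The strategy of starting general, adding one detail at a time and then retrying in other languages can be written down as a small query generator. The translation table here is a hypothetical, hand-made example containing only the words from the text; a real tool would need a proper dictionary.

```python
# Hypothetical, hand-made translation table covering only the example words.
TRANSLATIONS = {
    "de": {"Italy": "Italien", "Tuscany": "Toskana"},
    "it": {"Italy": "Italia", "Tuscany": "Toscana"},
}


def refinements(general, details):
    """Yield queries from general to specific, adding one detail at a time."""
    terms = list(general)
    yield " ".join(terms)
    for detail in details:
        terms.append(detail)
        yield " ".join(terms)


def translate(query, lang):
    """Replace each word that has an entry in the table for `lang`."""
    table = TRANSLATIONS[lang]
    return " ".join(table.get(word, word) for word in query.split())


for query in refinements(["Camping", "Italy"], ["Tuscany", "Pisa", "Beach"]):
    print(query)
# Camping Italy
# Camping Italy Tuscany
# Camping Italy Tuscany Pisa
# Camping Italy Tuscany Pisa Beach
print(translate("Camping Italy Tuscany", "it"))  # Camping Italia Toscana
```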

Which type of search engine suits you best depends on your requirements. It also depends on the quality of the material you want to gather. If you need a large number of web pages covering many different aspects of the desired topic, go for the crawlers. You can collect the information and use data mining procedures to evaluate the content. If you prefer only a few good pages, go for the directories, and if you need a mixture of both, go for the meta search engines. Prefer local search engines to global ones if you are searching for country- or language-specific information.

Finding the web pages of relatives and friends is easy. Just use any of the search engines described above and enter the first and last name as keywords. Provide additional information, such as the names of their children, their street address or anything else you associate with the person. If a person has a homepage, you will normally find the e-mail address on that page. If not, you can look up e-mail addresses on e-mail search engines.

A person's telephone number may also be on his or her homepage. If the person has an e-mail address, just send an e-mail and ask. Otherwise, look in the telephone directories on the Internet.

Finding files is easy. If you know the producer or distributor, go to the appropriate homepage. Patches for Photoshop can be found on Adobe's web site. New levels for the game Worms can be downloaded from the web site of its producer, Team 17. If you are looking for shareware, freeware or public domain programs, go to one of the program search engines.

6.1.5 Adding Information to Search Engines

Web sites need to be announced in order to appear in search engine results. The webmaster therefore has to go to each search engine and submit the URL. Depending on the type of search engine, either a human being or a computer will look at your web pages and decide whether to add them to the database. A crawler takes a few seconds to look at all your pages and store the relevant information in the database. A directory may need up to a month to review your web pages. Submitting the URL to every single search engine takes too much time, so some people have developed submission tools. Most of them charge a lot of money for submission, but some are free, such as the Broadcaster service in the UK.

Chapter 5 contains more information on submitting URLs to search engines. In this subsection we concentrate on the way search engines classify a web page. Most search engines first look at the number of occurrences of a certain keyword on a given web page. Next, they check whether the keyword appears in the domain name (such as www.keyword.com) or in the URL (such as http://www.blabla.com/keyword/bla.html). Then the search engine checks the title of the web page to see whether the keyword appears there. The meta-data that you can add to every web page is particularly important (see next subsection). If the search engine finds a particular keyword in every field mentioned here, the page will be classified as very useful to the user.
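The checks listed above can be combined into a single score. The weights below are invented for illustration (real engines did not publish theirs), the domain and path checks are plain substring tests, and the sample page is a hypothetical FlashPix page on a reserved `.example` host.

```python
import re
from urllib.parse import urlsplit

# Invented weights for illustration only; real engines kept theirs secret.
WEIGHTS = {"body": 1, "domain": 5, "url_path": 3, "title": 4, "meta": 2}


def occurrences(word, text):
    """Number of whole-word, case-insensitive occurrences of `word` in `text`."""
    return len(re.findall(rf"\b{re.escape(word)}\b", text.lower()))


def relevance(keyword, url, title, body, meta_keywords):
    """Score a page for one keyword using the checks described in the text."""
    word = keyword.lower()
    parts = urlsplit(url.lower())
    score = WEIGHTS["body"] * occurrences(word, body)    # appearances on the page
    if word in (parts.hostname or ""):                   # keyword in the domain name
        score += WEIGHTS["domain"]
    if word in parts.path:                               # keyword elsewhere in the URL
        score += WEIGHTS["url_path"]
    if occurrences(word, title):                         # keyword in the title
        score += WEIGHTS["title"]
    if word in [k.strip().lower() for k in meta_keywords.split(",")]:  # meta keywords
        score += WEIGHTS["meta"]
    return score


body = "FlashPix is an image format. This FlashPix demo shows how to use it."
print(relevance("FlashPix", "http://www.flashpix.example/flashpix/demo.html",
                "FlashPix Demo", body, "FlashPix, Java, JavaScript, Demo, Usage"))  # 16
```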

Professor Attardi at the University of Pisa developed a methodology that search engines can use to weigh the relevance of web pages. The first search engine to implement this methodology was Arianna in Italy. In addition to the criteria mentioned above, it counts the number of links pointing to a given web page: the more people link to a certain web page, the more important and relevant it is considered. This helps to present the most relevant pages first.

An affiliate network that links back to your company will therefore give your company a much higher relevance than any of your affiliates, as the affiliates do not link to each other.
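The core idea, ranking pages by how many other pages link to them, can be sketched with a plain count over a link graph. This is a simplification: it does not reproduce Arianna's actual formula and ignores where the links come from. The graph models the affiliate example with hypothetical page names.

```python
from collections import Counter


def inbound_link_counts(links):
    """`links` maps each page to the pages it links to; return inbound counts."""
    counts = Counter()
    for source, targets in links.items():
        for target in set(targets):
            if target != source:  # a page linking to itself does not count
                counts[target] += 1
    return counts


def rank_by_links(links, candidates):
    """Order candidate pages by how many other pages link to them."""
    counts = inbound_link_counts(links)
    return sorted(candidates, key=lambda page: counts[page], reverse=True)


# Hypothetical affiliate network: every affiliate links back to the company,
# the company links to each affiliate, and affiliates do not link to each other.
LINKS = {
    "company": {"affiliate1", "affiliate2", "affiliate3"},
    "affiliate1": {"company"},
    "affiliate2": {"company"},
    "affiliate3": {"company"},
}
print(inbound_link_counts(LINKS)["company"])                         # 3
print(rank_by_links(LINKS, ["affiliate2", "company", "affiliate1"]))
# ['company', 'affiliate2', 'affiliate1']
```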

6.1.6 Adding Value through Meta-Data

By adding meta-data to every single HTML document on your web site, you increase the probability that a search engine shows your page for a certain request. The meta-data is stored in so-called "meta-tags". Meta-tags are not displayed by the browser; they contain additional information on the document, such as its content, its author, the software used to create it or the keywords relevant to that page. The <meta> tag is used within the <head> element to embed document meta-information not defined by other HTML elements. The META element identifies properties of a document (e.g., author, expiration date, a list of keywords) and assigns values to those properties. At least two meta-tags should be present on all pages: description and keywords.

Using Meta-Tags

The following example shows how meta-tags could be used on a web page about FlashPix. Four named meta-tags are used, in addition to an HTTP-EQUIV tag that declares the character set:

- author — The author of the content.

- generator — The program used to create the web page.

- description — A short description of the content.

- keywords — Keywords related to the content.

<HEAD>
<META HTTP-EQUIV="Content-Type" CONTENT="text/html; charset=iso-8859-1">
<META NAME="Author" CONTENT="Daniel Amor">
<META NAME="Generator" CONTENT="BBEdit 4.5">
<META NAME="Description" CONTENT="This page explains the usage of Flashpix. There is a demo and links to other Flashpix pages.">
<META NAME="Keywords" CONTENT="FlashPix, Java, JavaScript, Demo, Usage">
<TITLE>Flashpix</TITLE>
</HEAD>

The description tag contains one or more sentences that briefly describe the content of the web page. Many search engines show this summary in the search results instead of the first few sentences of the page, so users understand much faster what the page is about. The keywords tag helps search engines decide how relevant a web page is for a given keyword. If you add the right keywords to the page, the search engines will show it much earlier in the listing. Add keywords in all languages that are relevant to you. Another useful piece of meta-information is the author of the web page. Add the author tag with the name and e-mail address of the person responsible for the page, and people will know whom to ask if they have questions about it.
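To see what a search engine can read from these tags, the sketch below extracts the title, description and keywords from a page head using Python's standard `html.parser` module. It is an illustration under simple assumptions (attribute values are taken as written and keywords are split on commas), not a description of any particular engine's parser.

```python
from html.parser import HTMLParser


class MetaExtractor(HTMLParser):
    """Collect <meta name=... content=...> pairs and the <title> text of a page."""

    def __init__(self):
        super().__init__()
        self.meta = {}
        self.title = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)  # attribute names arrive lower-cased
        if tag == "meta" and attributes.get("name"):
            self.meta[attributes["name"].lower()] = attributes.get("content") or ""
        elif tag == "title":
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def summarize(html):
    """Return the title, description and keyword list a search engine could index."""
    parser = MetaExtractor()
    parser.feed(html)
    parser.close()
    keywords = [k.strip() for k in parser.meta.get("keywords", "").split(",") if k.strip()]
    return {"title": parser.title.strip(),
            "description": parser.meta.get("description", ""),
            "keywords": keywords}


PAGE = """<HEAD>
<META NAME="Author" CONTENT="Daniel Amor">
<META NAME="Description" CONTENT="This page explains the usage of Flashpix.">
<META NAME="Keywords" CONTENT="FlashPix, Java, JavaScript, Demo, Usage">
<TITLE>Flashpix</TITLE>
</HEAD>"""

info = summarize(PAGE)
print(info["title"])     # Flashpix
print(info["keywords"])  # ['FlashPix', 'Java', 'JavaScript', 'Demo', 'Usage']
```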

Infoseek, for example, is able to search meta-tags. It is possible to look for all web pages written by a certain person. By entering "author:amor", you get a list of web pages created by people named "amor", such as myself. Programmers of web utilities can see how many web pages have been created with their tool. Bare Bones Software, the maker of BBEdit, could count these web pages by entering "generator:bbedit", for example.
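A field-restricted search of this kind can be sketched as below, assuming each indexed page's meta-tags have already been extracted into a dictionary. The `field:value` syntax mirrors the Infoseek example in the text, but the matching rule (case-insensitive substring) and the sample pages are assumptions, not Infoseek's implementation.

```python
def field_search(pages, query):
    """Evaluate a "field:value" query against per-page meta-tag dictionaries.

    Matching is a case-insensitive substring test on the named field.
    """
    field, _, value = query.partition(":")
    field, value = field.strip().lower(), value.strip().lower()
    if not value:
        return []
    return sorted(url for url, meta in pages.items()
                  if value in meta.get(field, "").lower())


# Hypothetical index: URL -> meta-tags already extracted from that page.
PAGES = {
    "http://a.example/flashpix.html": {"author": "Daniel Amor", "generator": "BBEdit 4.5"},
    "http://b.example/index.html": {"author": "Jane Doe", "generator": "BBEdit 5.0"},
    "http://c.example/": {"generator": "Another editor"},
}
print(field_search(PAGES, "author:amor"))            # ['http://a.example/flashpix.html']
print(len(field_search(PAGES, "generator:bbedit")))  # 2
```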

Meta-tags should also be multi-lingual. Add keywords in all the languages that you, your friends, family or employees know. Look up keywords in dictionaries to extend the reach of your information. This is especially important if your information is of value to many customers around the world, as multi-lingual and cross-lingual search engines are not yet standard.

6.1.7 Specialized Searches

In addition to general web searches, many specialized search engines have established themselves. These search engines focus on a particular file type and produce far better results than general search engines ever could. Before the invention of the World Wide Web, FTP sites were very popular. These sites contained lots of files that could be downloaded. At that time, a program called Archie, which allowed users to search for file names, was very popular. Today Archie is all but forgotten, but file search engines are still very popular. Sites such as shareware.com, filez.com and Aminet let users search for computer programs and data (images, sounds, fonts, etc.). Every file uploaded to one of these sites is accompanied by a short summary of its function and some keywords, which makes searching simple.
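A file search of this kind combines a filename pattern, in the spirit of Archie, with the summary and keywords supplied at upload time. In the sketch below the archive entries are made up, and shell-style wildcards from Python's `fnmatch` module stand in for whatever pattern syntax a given site offered.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase


@dataclass
class FileEntry:
    name: str
    summary: str
    keywords: tuple


# Made-up archive entries; each upload carries a summary and keywords.
ARCHIVE = [
    FileEntry("worms-levels.lha", "Extra levels for Worms", ("game", "levels")),
    FileEntry("fontpack.zip", "Collection of TrueType fonts", ("fonts",)),
    FileEntry("mp3play.lha", "MP3 player", ("sound", "music")),
]


def find_files(archive, name_pattern="*", keyword=None):
    """Names of files matching a wildcard pattern and, optionally, a keyword."""
    hits = []
    for entry in archive:
        if not fnmatchcase(entry.name.lower(), name_pattern.lower()):
            continue
        if keyword is not None:
            word = keyword.lower()
            if word not in entry.keywords and word not in entry.summary.lower():
                continue
        hits.append(entry.name)
    return hits


print(find_files(ARCHIVE, "*.lha"))          # ['worms-levels.lha', 'mp3play.lha']
print(find_files(ARCHIVE, keyword="fonts"))  # ['fontpack.zip']
```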

One of the most popular file formats is MP3, which is used for music. The music is stored in a highly compressed format with almost CD quality. Although distributing copyrighted music over the Internet without permission is illegal, many sites offer complete CDs for download. No matter what you are looking for, it is possible to find any CD on the Internet. You can download the music and store it on your hard disk or burn it onto a CD. The music industry opposes these search engines, but cannot do much about them. One of the most popular MP3 search engines can be found at Lycos. A meta-search engine for MP3 is also available at 123mp3.com, which lets you search not only several dedicated MP3 search engines, but also videos, lyrics and web sites.

Newsgroups are very popular, but without a search engine it is practically impossible to find a particular thread on a given subject. Tens of thousands of messages are posted to the newsgroups every day, so high-quality full-text search engines are needed to retrieve the relevant data. DejaNews is one example; it not only lets users search online postings, but also enables them to post new messages.

6.1.8 People Search Engines

Private investigators can use the Internet to research personal information on people. Finding postal addresses, telephone numbers and e-mail addresses has become very easy through specialized search engines. Generic search engines will find this information only if it is stored on web pages, but most of these databases use dynamic web page creation to present the data, which cannot be crawled by web spiders. Four11 lets users enter a first and last name to retrieve a person's e-mail address, and TeleAuskunft lets customers search for telephone numbers and postal addresses in Germany. So far, no telephone book has been established on the Internet that contains phone numbers from all countries around the world. A meta-search engine for phone books could resolve this problem rather quickly, but nobody has built one yet.

Although none of the databases on its own compromises the privacy of a person at present, the combined results can present a very detailed picture of a person. In Europe, only telephone numbers are available on the Internet, due to very restrictive privacy legislation. The United States has very lax privacy legislation, which allows very intrusive investigations.

Infospace, for example, allows the retrieval of phone numbers, postal addresses, financial data (such as credit card limits), whether a person has been to court, driving license information and even the names of neighbors. Although the service is not free of charge, it enables anyone with a credit card to become a private investigator without leaving the house. In chat groups, it is possible to talk to friends, neighbors or even the target person directly, using masquerading techniques. New technologies have also made it possible to track people around the net.

This information can be used to track a person. With the social security number, it is even possible to access other databases that are supposed to be restricted to the holder of that number. Just enter the number and you will be presented with personal data.

The DigDirt web site goes even further. It researches even more private details, such as doctor's visits and credit card statements, which make the person even more transparent. How often a person has been to the doctor says something about his or her state of health, and credit card statements show quite clearly where the person has been over the last few months, for example, and how much money has been spent.

Additional information can be found in the local newspaper. Most newspapers offer an online search function that gives access to the complete newspaper archive, where you may find more information on a certain person.

This information could be used by an employer to evaluate if a candidate is suitable for a certain job. An online retailer could do some background checking on a customer before sending out the ordered goods. Many privacy organizations are worried by this development. More information on privacy issues on the Internet and how to resolve them can be found in Chapter 10.

6.1.9 Tracking Search Result Positions

As every search engine returns a whole set of web pages for every query, it is important to appear within the first ten results. It is therefore important to check the position of your web site in every important search engine from time to time. To move up in the ranking of a search engine, check out the web pages ranked higher than your site. Download those pages, look through their HTML code and content, and write down their URLs. All these pieces of information influence the position of a web site, as we have already learned.
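Checking a position by hand means scanning the result list for your own host. The sketch below assumes the result URLs for one query have already been collected (how they are obtained differs per engine and is not shown) and uses a made-up result list.

```python
from urllib.parse import urlsplit


def position(results, my_host):
    """1-based rank of the first result on `my_host`, or None if it is not listed."""
    for rank, url in enumerate(results, start=1):
        if urlsplit(url).hostname == my_host:
            return rank
    return None


def ranked_above(results, my_host):
    """Results that outrank `my_host` (all of them if it is not listed)."""
    rank = position(results, my_host)
    return results[: rank - 1] if rank is not None else list(results)


# Made-up result list for one query.
RESULTS = [
    "http://www.rival-one.example/",
    "http://www.rival-two.example/ties.html",
    "http://www.my-shop.example/ties.html",
    "http://www.rival-three.example/",
]
rank = position(RESULTS, "www.my-shop.example")
print(rank, rank is not None and rank <= 10)  # 3 True
print(ranked_above(RESULTS, "www.my-shop.example"))
# ['http://www.rival-one.example/', 'http://www.rival-two.example/ties.html']
```

The pages returned by `ranked_above` are the ones whose HTML code and content are worth studying.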

If you want to improve your position on various search engines, you need to keep track of what you have submitted. Every time you change your web site, you should re-submit the URL to every search engine. Web crawlers will automatically come back to your site at regular intervals, but by re-submitting the URL, you get the entry updated right away.

This can, of course, also be done automatically. Several Internet applications allow automatic tracking of positions in search engines. One of them is called WebPosition. This program finds the exact position of each of your pages in every major search engine. In addition, it gives automatic advice on how to improve the current position by checking how closely your page matches the ranking rules of each search engine.

Reports are generated automatically, and search engine positions can be compared between reports. WebPosition has a special built-in function called "Mission Creation". With this feature, it is possible to create web pages that rank very high on a given search engine for certain keywords. If you want to move a certain web page into the top ten of Altavista for a certain keyword, the program guides you through a simple process of changing the current web page so that it becomes one of the top addresses for that keyword.
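Comparing two position reports is essentially a per-keyword diff. This sketch does not reproduce WebPosition's report format; it assumes each report is simply a mapping from keyword to position for one search engine, with `None` (or a missing keyword) meaning "not found in the results", and the sample figures are invented.

```python
def describe_change(before, after):
    """Describe how a position moved between two reports (None = not listed)."""
    if before is None and after is None:
        return "not listed"
    if before is None:
        return f"new at {after}"
    if after is None:
        return f"dropped from {before}"
    if after < before:
        return f"up {before - after}"
    if after > before:
        return f"down {after - before}"
    return "unchanged"


def compare_reports(old, new):
    """Compare two keyword -> position reports for one search engine."""
    keywords = sorted(set(old) | set(new))
    return {kw: describe_change(old.get(kw), new.get(kw)) for kw in keywords}


last_month = {"ties": 14, "silk ties": 3, "bow ties": None}
this_month = {"ties": 8, "silk ties": 5, "cufflinks": 2}
print(compare_reports(last_month, this_month))
# {'bow ties': 'not listed', 'cufflinks': 'new at 2', 'silk ties': 'down 2', 'ties': 'up 6'}
```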

In combination with a submission tool, you can increase traffic on your web site and direct more relevant visitors to it. One such tool is Submit-It, which enables online and offline submission. All the relevant information is entered into a form, which is then sent to a large number of search engines. The program does nothing that you could not do by manual submission, but automatic submission is much faster.

6.2 The Future of Searching

6.2.1 Issues with Search Engines

Searching by keyword is the most common way of using a search engine, but the problem with keywords is that they produce relatively imprecise results and return a lot of irrelevant information. Keywords may have more than one meaning, and some results may only be found by using a synonym of the keyword. Browsing, on the other hand, takes too much time to find the relevant information. Directories like Yahoo try to circumvent the problem, but classifying material on the web manually takes so much time that searches return very few results, as not everything on the web can be classified. Therefore, new search paradigms are needed, as well as new software that is able to categorize web sites automatically.

The majority of search engines come from the United States and have specialized in English resources and information reflecting American culture. People who do not speak English, or who are non-native speakers, are therefore at a considerable disadvantage on the web.

The centralized approach to information retrieval has extreme difficulty in coping with the multi-lingual and multi-cultural nature of the Information Society. The Internet has become a success throughout the world, but the American search engines operate with a US-centric company structure and tend to concentrate upon the English language. Although many search engines have subsidiaries in many other countries, like Japan or Italy, the way the information is presented is the American way and may not reflect the logic of the people who are using it.

National search engines in Russia or France, for example, have to deal with far smaller sets of information and specialize in the cultural and linguistic environments which they know best. Their disadvantage is that the queries are in Russian or French and the search results contain only a small subset of possible results on the web, as they are restricted to the language. This strongly reduces the possibility of using the Web as a source for the world wide diffusion of information.

Larger search engines, such as Altavista, are able to perform multi-lingual searches that present results in multiple languages. This is good if the searcher knows all the languages, but if, for example, an Indian user who does not read Japanese finds a Japanese web site on the search topic, this may not be helpful.

Text documents in special formats (such as PostScript or StarOffice documents) are unreachable for many search engines, as the textual information is embedded in the binary structure of the particular file format. The same applies to scanned documents, Java applets and video/audio clips. The content of these file formats is hidden from today's search engines. Only if the file format is documented and support for it is built into the search engine can the content of such documents be indexed. This is relatively easy; it is just a matter of work. Infoseek, for example, is able to index the content of Word documents. Including content hidden in applications is more difficult, as there is no way to tell where the information may be hidden.

Research and development in information and data retrieval aims to improve the effectiveness and efficiency of retrieval. Separate, parallel development of database management systems has left this sector without a central vision and without co-ordination between the different types of search engines. Search engines on the Internet are very specific and unable to cope with multiple database formats and file types. To make searches complete, a search engine needs to search text, documents, images, sounds and all other media formats. Database integration will therefore be the single most important objective for the future of intelligent search engines.

To obtain better search results, it is necessary to improve not only the search engine technology, but also the user interfaces, tailored to the type of user (such as casual users, researchers, or users with special requirements).

Neural networks will be used more commonly in the future to organize large, unstructured collections of information. Autonomy is a search engine that uses adaptive probabilistic concept modelling to understand large documents. The system learns from each piece of information it discovers. Even a set of resources that are individually not very relevant can, taken together, give the system the big picture once it has read through all the documents. It is therefore necessary to rank information by importance.

This allows the zones of a portal (zones are explained later in this chapter) to be created automatically or with a minimum of manual intervention, instead of being built entirely by hand, as Yahoo does, for example. To achieve this goal, such systems need to employ technologies for concept clustering and concept profiling.
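Autonomy's own algorithms are not described here, so the following is only a minimal sketch of the general idea of concept clustering, using a simple swapped-in technique: each document becomes a word-count vector, and documents are grouped greedily by cosine similarity. The function names and the 0.3 similarity threshold are illustrative assumptions.

```python
import math
import re
from collections import Counter

def term_vector(text: str) -> Counter:
    """Bag-of-words vector: lower-cased word -> number of occurrences."""
    return Counter(re.findall(r"[a-z]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two word-count vectors (0 = unrelated, 1 = identical mix)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def cluster(docs: list[str], threshold: float = 0.3) -> list[list[int]]:
    """Greedy single-pass clustering: each document joins the first cluster whose
    first (seed) document is similar enough, otherwise it starts a new cluster."""
    vectors = [term_vector(d) for d in docs]
    clusters: list[list[int]] = []
    for i, v in enumerate(vectors):
        for c in clusters:
            if cosine(v, vectors[c[0]]) >= threshold:
                c.append(i)
                break
        else:
            clusters.append([i])
    return clusters
```

For example, `cluster(["cheap flights to rome", "flights to paris", "guitar chords for beginners", "learn guitar chords"])` groups the two travel documents and the two music documents into separate clusters, which could then serve as candidate zones.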

6.2.2 EuroSearch

One project that aims to overcome the limitations of search engines described above is the EuroSearch project. EuroSearch is a federation of national search engines that is intended to deliver much better results and to be better suited to the challenges of the multi-lingual and multi-cultural global Internet. The founding members are national search engines from Italy, Spain and Switzerland. The multi-lingual approach allows a query to be entered in the searcher's preferred language; the search engine then takes care of searching the search engines in the other languages.

Every national site that is part of the federation remains in its country of origin and is maintained by a native speaker who ensures that the search works in their own language. At the same time, the EuroSearch framework tries to remain open to other countries and services that would like to join the initiative.

The framework allows the provision, access and retrieval of documents not only in English but also in a variety of other European languages. This makes it easier to find relevant information that has been published in languages other than English. It enables people who do not speak English to retrieve information, and it allows information providers to present their information in their mother tongue, in which they can express themselves more clearly.

Unlike search engines that retrieve information in only one language, a cross-language search engine needs to present its results in a form that the searcher understands: the descriptions of the documents should be presented in the language of the query. Cross-language retrieval is not the same as multi-lingual retrieval. Multi-lingual systems support many different languages, but cross-language retrieval systems additionally retrieve relevant information written in a language different from that of the user's query. The query is translated into the target language, and then the search engine is queried. To broaden the search, a thesaurus for every language needs to be incorporated into every search engine, and automatic classification procedures need to be implemented.
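The query translation and thesaurus step can be sketched as follows. This is a minimal illustration, not the EuroSearch implementation: the tiny English-to-Italian dictionary `EN_TO_IT` and the Italian thesaurus `IT_THESAURUS` are hypothetical stand-ins for the full language resources a real system would need.

```python
# Hypothetical bilingual dictionary (English -> Italian).
EN_TO_IT = {
    "wine": ["vino"],
    "red": ["rosso"],
    "tuscany": ["toscana"],
}

# Hypothetical Italian thesaurus used to broaden the translated query.
IT_THESAURUS = {
    "vino": ["enologia"],
}

def translate_query(query: str, dictionary: dict, thesaurus: dict) -> list[str]:
    """Translate each query word, then add related terms from the target-language thesaurus."""
    terms = []
    for word in query.lower().split():
        # Words without a translation (e.g. proper names) are passed through unchanged.
        for translated in dictionary.get(word, [word]):
            terms.append(translated)
            terms.extend(thesaurus.get(translated, []))
    return terms
```

`translate_query("red wine Tuscany", EN_TO_IT, IT_THESAURUS)` yields the Italian search terms `["rosso", "vino", "enologia", "toscana"]`. The descriptions of the documents found would then have to be translated back into English before they are shown to the user.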

The EuroSearch project aims to develop techniques and resources for implementing a cross-language search engine and to improve retrieval and classification technologies. The ultimate goal is to create a federation of national search engines that work together to deliver better search results. A prototype is currently being built that provides an interface for formulating queries and presents understandable results in the user's preferred language. This requires the system not only to translate the queries into a meta-language but also to translate the results and the resulting web pages into the query language, so that they are accessible to the user. This approach makes the whole web more accessible to non-English speakers.
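How a federation might merge the answers of its member engines is not specified in the text. The following is a minimal sketch under one common assumption: each national engine returns `(url, score)` pairs on its own scale, so scores are first normalized per engine and then sorted into one list. The `Engine` type and the function name are hypothetical.

```python
from typing import Callable

# A national engine takes a query and returns (url, score) pairs on its own scale.
Engine = Callable[[str], list[tuple[str, float]]]

def federated_search(query: str, engines: dict[str, Engine]) -> list[tuple[str, str, float]]:
    """Query every member engine and merge the results into (url, country, score) triples."""
    merged = []
    for country, engine in engines.items():
        results = engine(query)
        if not results:
            continue
        top = max(score for _, score in results)
        for url, score in results:
            # Normalize each engine's scores to 0..1 so that engines become comparable.
            merged.append((url, country, score / top if top else 0.0))
    # Highest normalized score first; Python's stable sort keeps engine order for ties.
    merged.sort(key=lambda r: r[2], reverse=True)
    return merged
```

In a real federation each `Engine` would be a network call to a national site, and the query would already have been translated into that site's language.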

EuroSearch will simplify access to information on the Internet in and across different languages. Simplified, multi-lingual access to the wide variety of information on the Web will improve cultural exchange and knowledge integration between European countries, which means a significant improvement in the quality of the information available to every user. This will affect the acceptance of the Internet not only in Europe but in all non-English-speaking countries.

6.2.3 Natural Language Searches

The idea of natural language access to a database is not new, but it has still not been achieved. Most search engines are not able to handle questions such as "Where can I get light bulbs?" or "How many legs does a horse have?" The answers to these questions can certainly be found on the web, but the search engines are not able to understand the questions. If a page happens to contain both the question and the answer, you may succeed, but this is rare. Instead of receiving the answer "At shop XY in Z" or "four", you receive a long list of search results, as the search engines split the question into keywords and then search for every word of the question.
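The keyword splitting described above can be illustrated with a short sketch. Many keyword engines also drop very common words ("stop words"); the small `STOP_WORDS` set here is an assumption chosen for the two example questions, not any particular engine's list.

```python
import re

# Small illustrative stop-word list; real engines use much larger ones.
STOP_WORDS = {"how", "many", "does", "a", "have", "where", "can", "i", "get"}

def to_keywords(question: str) -> list[str]:
    """Reduce a question to the bare keywords a conventional engine would search for."""
    words = re.findall(r"[a-z]+", question.lower())
    return [w for w in words if w not in STOP_WORDS]
```

`to_keywords("How many legs does a horse have?")` leaves only `["legs", "horse"]`: the question itself, and therefore the expected answer "four", is lost, and the engine simply returns every page about horses' legs.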

The difference between conventional and natural language search engines lies in the way pages are indexed. Unlike a conventional database, the symbolic approach to natural language processing treats words as nodes in a semantic network. The emphasis is then placed on the meaning of the words rather than on the individual words themselves.

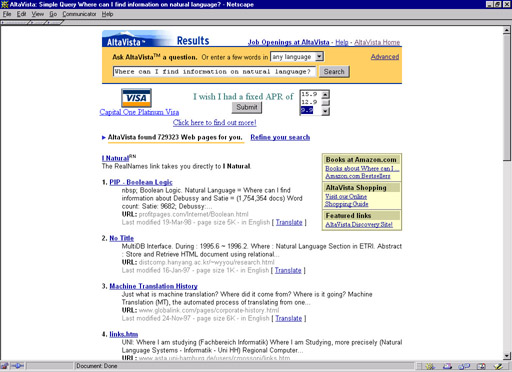

Figure 2: Natural Language Search on Altavista

A natural language processor (NLP) for an Internet search engine needs a large and precise dictionary. Words are then represented by the way they are used (this approach is called case-based reasoning). Each occurrence of a word is treated individually, which results in extremely large databases, as a word can have up to a few thousand individual meanings. The strength of case-based reasoning lies in its flexibility. Since the database is based on semantics, even imprecise queries may retrieve information that is close to the answer but does not exactly match the question.

The second approach to natural language search engines is to add a thesaurus to the database. A query on "plant" will then also look up "factory" and "flower", as both are related to "plant", even though they have totally different meanings. This brings a significant improvement in precision and recall over current search methods. Improving the syntax analysis is also very useful: by analyzing the syntax of a query, it is possible to compare it with patterns stored in the database. Altavista supports natural language queries, and the results improve every day. Figure 2 shows the results for the question "Where can I find information on natural language?" Only simple questions are supported, but this is already a step towards complex natural language search engines.
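Thesaurus-based query expansion, as in the "plant" example, can be sketched in a few lines. The `THESAURUS` entries are hypothetical examples, and the function shows only the expansion step, not how a search engine would weight the added terms.

```python
# Hypothetical thesaurus; "plant" maps to two unrelated senses.
THESAURUS = {
    "plant": ["factory", "flower"],
    "car": ["automobile"],
}

def expand_query(terms: list[str], thesaurus: dict[str, list[str]]) -> list[str]:
    """Add related terms from the thesaurus after each query term, without duplicates."""
    expanded = []
    for term in terms:
        for t in [term] + thesaurus.get(term, []):
            if t not in expanded:
                expanded.append(t)
    return expanded
```

`expand_query(["plant", "visit"], THESAURUS)` returns `["plant", "factory", "flower", "visit"]`. The expanded query mixes both senses of "plant", which is exactly why the syntax analysis mentioned above is needed to decide which sense the user meant.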

6.2.4 Image Search Engines

There are many images on the Internet, but unfortunately it is not possible to search for them in the same way as for web pages or music files. For web pages I can use text to describe what I am searching for, and for music I can name the artist or the title (although I would have trouble searching for a sequence of notes). Images on the web, however, are in most cases not works of art; if they were, it would be possible to search for the artist or the title.

In many cases people search for a boat, a house or a dog, for example to illustrate a text. Image search engines on the Internet today use the information that accompanies a picture, such as the file name (e.g. src="cat.jpg"), the alternative text (e.g. alt="this is a picture of a cat") or the text next to the image ("the following image displays a cat"). As long as your search is very generic, the existing image search engines work very well. More and more people add the HTML alt attribute to provide additional information on the image, which helps in finding the right image. Unfortunately, the alternative text (i.e., the ALT attribute) provided for textual WWW images is in most cases wrong or incomplete.
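A minimal sketch of how an image indexer might collect these three sources of text uses Python's standard `html.parser` module. Taking the most recent text before the image as its "context" is a simplifying assumption; real engines look at a wider neighbourhood. The class and function names are hypothetical.

```python
from html.parser import HTMLParser

class ImageTextExtractor(HTMLParser):
    """Collects the file name, alt text and preceding text of every <img> tag."""

    def __init__(self):
        super().__init__()
        self.images = []
        self._last_text = ""  # most recent non-empty text seen before an image

    def handle_data(self, data):
        if data.strip():
            self._last_text = data.strip()

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        self.images.append({
            "file": src.rsplit("/", 1)[-1],  # file name without the path
            "alt": attributes.get("alt") or "",
            "context": self._last_text,
        })

def extract_images(html: str) -> list[dict]:
    parser = ImageTextExtractor()
    parser.feed(html)
    parser.close()
    return parser.images
```

Feeding it `<p>The following image displays a cat</p><img src="/pics/cat.jpg" alt="this is a picture of a cat">` produces one entry with the file name `cat.jpg`, the alt text and the preceding sentence, all of which could then be indexed like ordinary page text.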

The problem arises as soon as you search for more specific images. "A red cat with a little ball" could be a very common request. It is highly unlikely that the file name will contain all this information; the alt text and the accompanying text may contain it, but don't be too sure. Another issue is that the cat with the ball may be just one of many things in the picture. The web page could well be talking about balls and not mention the cat at all, or talk about a table on which the cat and the ball are placed. In these cases image search engines that rely on textual information will fail miserably. Many images contain text that could help to index them correctly; the problem is extracting the textual information from the image. The logo of Tucows, for example, has the alt text "Moooo...". This information does not help anyone, whereas extracting the text from the logo itself would help to index the image correctly.

Apostolos Antonacopoulos of the University of Liverpool in the UK started a project in 1998 to extract textual information from images on the web. Although humans are able to extract the information from images very easily, there is at present no way to automate the analysis of such text.

To index and validate the information in an image, the characters embedded in the image first need to be extracted and then recognized. This task appears similar to traditional optical character recognition (OCR), but there are several differences. Text in images has the advantage over scanned text that it contains no distortions from digitization. Unlike scanned documents, however, images tend to have complex backgrounds (compared with white paper), and the characters are very small, as the resolution is kept low. A web image is displayed at 72 dpi, while documents are typically scanned at 300 dpi. If a lossy compression algorithm is used, as in the JPEG format, compression and quantization artifacts appear, making it even more difficult to extract and recognize a character.

Previous efforts to analyze text in images restricted themselves to single-color text and ignored very small characters. Because they analyzed the image file globally, they were slow. Since very little text on the web uses a single color and much of it is small, this approach did not solve the problem. Mr Antonacopoulos' approach is different: the algorithms used allow the identification of text with gradient colors or textures on complex background textures, which makes it possible to retrieve textual information from images.

The results are achieved in several steps. First, the number of colors needs to be reduced. While GIF images use at most 256 colors, JPEG images can use up to sixteen million colors. Reducing the number of colors makes it easier to detect text within the image. By dropping bits, the number of colors is reduced to 512. A color histogram is then created, which shows how often each color is used in the image. To identify the differently colored parts of the image, the colors present in the image are then grouped into clusters according to their similarity.
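The bit-dropping and histogram steps can be sketched as follows, assuming that "dropping bits" means keeping only the three most significant bits of each 8-bit red, green and blue value (8 levels per channel, and 8 × 8 × 8 = 512 colors). The subsequent clustering of similar colors is not shown, and the function names are hypothetical.

```python
from collections import Counter

def reduce_color(rgb: tuple[int, int, int], bits: int = 3) -> tuple[int, int, int]:
    """Keep the top `bits` bits of each 8-bit channel; 3 bits give 8 levels per
    channel, i.e. 8 * 8 * 8 = 512 possible colors."""
    shift = 8 - bits
    r, g, b = rgb
    return (r >> shift, g >> shift, b >> shift)

def color_histogram(pixels, bits: int = 3) -> Counter:
    """Count how often each reduced color occurs in an iterable of (r, g, b) pixels."""
    return Counter(reduce_color(p, bits) for p in pixels)
```

Near-identical shades collapse into one bin: `(255, 255, 255)` and `(250, 250, 250)` both become `(7, 7, 7)`, so slight JPEG artifacts around a white character no longer appear as separate colors in the histogram.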

After the algorithm has determined the main colors, the regions that share the same color are extracted, and a special technique is used to identify the connected components. This allows the contours of image regions that form closed shapes to be identified. By examining the relationships between regions, potential character components are extracted. The system works very well, so well in fact that it has found its way into a commercial software package.
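Connected-component labelling is a standard technique. The following minimal sketch uses a breadth-first search with 4-connectivity on a binary mask in which 1 marks pixels of one of the main colors. The "special technique" used in the Liverpool project is not described in the text, so this should be read as a generic illustration only.

```python
from collections import deque

def connected_components(mask: list[list[int]]) -> list[list[tuple[int, int]]]:
    """Group 4-connected cells with value 1 (e.g. pixels of one main color) into components."""
    rows = len(mask)
    cols = len(mask[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    components = []
    for r in range(rows):
        for c in range(cols):
            if mask[r][c] != 1 or seen[r][c]:
                continue
            # Breadth-first search from an unvisited foreground pixel.
            queue = deque([(r, c)])
            seen[r][c] = True
            component = []
            while queue:
                y, x = queue.popleft()
                component.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            components.append(component)
    return components
```

Each component is a candidate region. A character detector would then examine properties such as the size, shape and alignment of neighbouring components to decide which ones form text.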

The next step will be a search engine that recognizes objects in an image, but this is extremely difficult and requires a lot of computing power, as a cat may be painted, photographed or drawn. The cat can run, sit or jump, and the perspective can vary. Although a human can recognize a cat very easily, expressing the appearance of a cat mathematically is very difficult, so don't expect anything on the Internet soon. But then again, the first rule of the Internet is still valid: expect the unexpected.

6.3 Intelligent Network Agents

6.3.1 Little Helpers on the Web

Intelligent agents are widely used in computer science and are part of the research in artificial intelligence. Areas of agent research include user-interface agents, such as personal assistants and information filters, which perform networking tasks for a user. Another area of agent research is multi-agent systems, covering topics such as agent communication languages and co-ordination/co-operation strategies. Autonomous agents are programs that travel between sites and decide for themselves when to move and what to do. At the moment this type of agent is not very common, as it requires a special type of server on which the agent can navigate. This section focuses on network agents, also known as "knowbots", little robots that gather information on a network.

As we saw in the last section, search engines can help us find information. The problem is remembering where this information was and when we last looked at it, and knowing whether something has changed since our last visit. It sounds easy to save every interesting page in the browser's bookmarks, but soon you will have hundreds of bookmarks on your computer, many of which point to dead links.

The major disadvantage of search engines is that they require human interaction, which leads to incomplete sets of information. Even with good search strategies it may take some time to locate the information desired, and time is money. In many cases people are not able to locate content although it is available on the Internet.

To reduce the amount of time needed, intelligent agents have been developed that are able to search the Internet without user interaction (except for entering the keywords). Let's look at an example. Suppose you are looking for literature on Shakespeare. No matter how hard you search the Internet, you will always miss some information, as there are far too many sites, newsgroups, portals and chats to check.

The idea of intelligent agents is to search for a set of given keywords (in our example, Shakespeare and literature) not only once but repeatedly, every hour, day or month, so that you are always informed of the latest developments on your topic. Simply putting together a query for a given set of databases would not solve the problem, as the set of databases on the Internet changes all the time: new sites appear and existing sites disappear. The agent keeps you up to date.
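One way an agent can tell what has changed between two runs is to store a hash of each page's content. The sketch below assumes a caller-supplied `fetch` function (hypothetical; in practice an HTTP download) and a `seen` dictionary that the agent keeps between runs. The URL list itself would be refreshed from a keyword search on each run, so that new sites are picked up.

```python
import hashlib
from typing import Callable

def content_hash(text: str) -> str:
    """Fingerprint of a page's content."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()

def check_for_changes(urls: list[str], fetch: Callable[[str], str], seen: dict[str, str]) -> list[str]:
    """Return the URLs that are new or whose content changed since the last run.
    `seen` maps URL -> content hash and is updated in place."""
    changed = []
    for url in urls:
        digest = content_hash(fetch(url))
        if seen.get(url) != digest:  # new page or modified content
            changed.append(url)
            seen[url] = digest
    return changed
```

Run on a schedule (for example hourly), the first call reports every page, and later calls report only pages that are new or have been modified, which is what the user is actually interested in.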

Intelligent agents should not only collect data but also classify it. This can be done by counting how often a certain word or its synonyms appear, or by analyzing the structure of the document. The difficulty lies in determining the importance and relevance of the keywords found in a given document.
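Counting a keyword together with its synonyms can be sketched as follows. The synonym lists in the usage example are assumptions chosen for the Shakespeare example. Weighting the counts by importance, the hard part mentioned above, is left out.

```python
import re

def relevance(text: str, keywords: dict[str, list[str]]) -> dict[str, int]:
    """For each keyword, count how often it or one of its synonyms occurs in the text."""
    words = re.findall(r"[a-z]+", text.lower())
    counts = {}
    for keyword, synonyms in keywords.items():
        variants = {keyword, *synonyms}
        counts[keyword] = sum(1 for w in words if w in variants)
    return counts
```

With `{"shakespeare": ["bard"], "literature": ["plays", "poetry"]}` as the keyword profile, the sentence "Shakespeare wrote plays. The Bard's plays are literature." scores 2 for Shakespeare and 3 for literature, even though each keyword appears literally only once.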

6.3.1 E-Mail Agents

One of the first applications of intelligent agents was sorting unwanted e-mail out of the inbox. Although it is possible to delete mail from certain e-mail addresses automatically, this no longer helps, as spammers use fake addresses that change every time they send something out. It is therefore necessary to develop agents that detect spam automatically by the keywords it contains or by its structure.
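A very simple rule-based spam detector combines keyword and structure checks into a score. The phrase list, the weights and the threshold below are illustrative assumptions. Real filters use far larger rule sets or statistical methods.

```python
SPAM_PHRASES = ["make money fast", "free offer", "click here"]  # illustrative list

def spam_score(subject: str, body: str) -> int:
    """Score a message: 2 points per suspicious phrase, 1 point per structural hint."""
    text = f"{subject} {body}".lower()
    score = sum(2 for phrase in SPAM_PHRASES if phrase in text)
    # Structural hints: a subject line in capitals and many exclamation marks.
    if subject.isupper():
        score += 1
    if text.count("!") > 3:
        score += 1
    return score

def is_spam(subject: str, body: str, threshold: int = 3) -> bool:
    return spam_score(subject, body) >= threshold
```

A message with the subject "MAKE MONEY FAST" and the body "Click here now!!!!" scores 6 and is filtered out, while "Meeting tomorrow" scores 0. Because the rules do not depend on the sender's address, changing the fake address does not help the spammer.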

Another application in the area of e-mail for agent software is to prioritize e-mail by importance. This type of agent moves the most important mail to the top of your mail folder, so that you can read it first. The priority can be defined by sender, content or structure.

In the first case it is rather simple to build out-of-the-box products to prevent spam, as most people see spam in a similar way. In the second case the agent must be customized to support personal needs. This is much harder, as it requires the person using the agent to think about their priorities. Although this exercise is really helpful and saves a lot of time, especially if you receive a lot of e-mail every day, many people do not take the time to think about their priorities.
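The personal priorities can be expressed as weights for senders and subject keywords. The sketch below assumes this simple additive scheme. The `Mail` class and the example weights in `SENDER_WEIGHTS` and `KEYWORD_WEIGHTS` are hypothetical and would be configured by each user.

```python
from dataclasses import dataclass

@dataclass
class Mail:
    sender: str
    subject: str

# Personal priorities: example values the user would configure.
SENDER_WEIGHTS = {"boss@example.com": 10, "family@example.com": 5}
KEYWORD_WEIGHTS = {"urgent": 8, "invoice": 4}

def priority(mail: Mail) -> int:
    """Sum the sender weight and the weights of all keywords found in the subject."""
    score = SENDER_WEIGHTS.get(mail.sender, 0)
    subject = mail.subject.lower()
    score += sum(weight for keyword, weight in KEYWORD_WEIGHTS.items() if keyword in subject)
    return score

def sort_inbox(inbox: list[Mail]) -> list[Mail]:
    """Most important mail first."""
    return sorted(inbox, key=priority, reverse=True)
```

With these weights, an unknown sender's "URGENT: invoice overdue" (12 points) is placed above a routine message from the boss (10 points). Deciding on such weights is exactly the thinking about priorities that many users skip.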

6.3.2 News Agents

Over the past few years more and more news agents have established themselves. These news agents create a kind of customized online newspaper that displays only the news that interests the customer. To do this, the agents need to visit all news sites and decide which of the news items offered may interest the particular customer. They then collect the information and pass it on via e-mail or a web front end.
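The selection step of a news agent can be sketched as matching headlines against a reader's interest profile. Representing the profile as a set of lower-case words is a simplifying assumption. The function name and the example URLs are hypothetical.

```python
import re

def personalised_newspaper(headlines: list[tuple[str, str]], interests: set[str]) -> list[tuple[str, str]]:
    """Keep the (headline, url) pairs whose headline mentions one of the reader's interests."""
    selected = []
    for headline, url in headlines:
        words = set(re.findall(r"\w+", headline.lower()))
        if words & interests:
            selected.append((headline, url))
    return selected
```

For a reader interested in `{"bundesliga"}`, only the headline "Bayern wins the Bundesliga" is kept from a list that also contains "New tax rules announced". Returning just the headline and a link to the original site also fits the way news agents are now allowed to present articles.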

In Germany two companies compete in the news agent business: Paperball and Paperboy. The competition between these services drives them to add more online newspaper sources to their databases to create even more detailed personalized newspapers. These can either be viewed on a web page, or the headlines, together with some additional relevant information, can be sent to the customer automatically via e-mail.

There have been some disputes between the newspapers and the digital news agents about how the information should be presented on the news agents' web sites. Originally the digital news agents collected the raw information and included it in their own layout, adding their own advertising. This is no longer possible: to stay out of legal trouble, the news agents may only reproduce the headlines and a few lines of text and must then link to the original newspaper site.

There are still many privately owned sites that offer collections of news links with the advertising stripped away. When these sites become popular, online news services sue the owners and try to shut them down. But for every site that is shut down, ten new ones appear, making it difficult for Internet news providers to stop the practice. Making information available for free, even without advertising, is part of Internet culture; there is no technical way to stop it, and legal power is limited. See Chapter 4 on the legal aspects.

6.3.3 Personal Shopping Agents

The most interesting agent is the personal shopping agent, which scans the Internet for the lowest price for a given product. The shopping agent consists of a database of web merchants and information on how to access their databases. Once customers have decided on a product, they can key in the product name, part number, ISBN or a keyword that relates to the product, and the shopping agent starts checking all the merchants for the product. If the search word is too generic, the customers are presented with a selection of possible products. Once the customers decide on a particular product, the shopping agent queries all databases for prices and availability. The prices displayed normally include not only the price of the product but also the shipping costs, so the total price is displayed as well as the delivery time.
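The final ranking step of a shopping agent can be sketched as follows. Prices are kept in whole cents to avoid floating-point rounding. The `Offer` class and the example shops are hypothetical, and querying the merchants' databases is assumed to have happened already.

```python
from dataclasses import dataclass

@dataclass
class Offer:
    merchant: str
    price_cents: int      # product price
    shipping_cents: int   # shipping and handling
    delivery_days: int

    @property
    def total_cents(self) -> int:
        return self.price_cents + self.shipping_cents

def rank_offers(offers: list[Offer]) -> list[Offer]:
    """Cheapest total price first; faster delivery breaks ties."""
    return sorted(offers, key=lambda o: (o.total_cents, o.delivery_days))
```

Given offers of 29.95 + 5.00 (3 days), 27.95 + 3.00 (21 days) and 31.95 with free shipping (2 days), the ranking is the 21-day offer, then the free-shipping one, then the first. This reflects the trade-off between price and delivery time that the customer finally has to weigh.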

Acses, developed by some German students, has become highly successful. It specializes in searching for books on the web. When you enter the title, the author or the ISBN, the shopping agent searches for the book and presents the results in a list, sorted by price including postage and packaging. Besides the price, the delivery time is shown. I use the system very often and have already found some books to be cheaper in Australia than in Germany, where I live. Sending the books from Australia may take a few weeks, but if the price difference is large enough, it is a viable option.

Jango, a shopping agent developed by the American company Netbot, allows the price comparison of computer products. As with Acses, the price list is not stored at the agent's site but is retrieved at the moment the customer asks for it, so the current price is always shown. The difference between Jango and Acses is the way payment is handled. Acses sends customers to the bookshop, where they pay using the bookshop's payment system. Jango, on the other hand, allows customers to pay on the Jango site without direct access to the Internet shop that provides the product.

As more and more shopping agents become available, a special portal for shopping agents has been set up. SmartBots.com is a directory offering information on shopping agents from all over the world. See Table 3 for an overview of some of the most widely used shopping agents, which can also be found on SmartBots.com.

Shopping agents work for mass products such as software, compact discs and books, as these require no customization for the individual customer. Other products, such as cars or complete computer systems, will not be targeted by these agents initially, as the offerings differ too much from one web site to the next, just as the customers' requirements do. Cross-promotion and service are other aspects the shopping agents cannot deal with, as they cannot be expressed as a simple value.

To offer these advanced features, the shopping agents themselves would need to become highly complex configuration systems. Such a shopping agent could then find a complete computer system at the lowest possible price by comparing all the components individually and looking for cross-promotions (e.g. a hard disk becomes cheaper if bought with a certain type of memory). This will require a large investment by the operator of the shopping agent, as the agent needs to know which components work together and which exclude each other, and this information is different for every product. The shopping agents would eventually become true brokers.
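The kind of configuration search described above can be sketched as a brute-force search over all combinations of parts. The catalogue, the incompatible pair and the bundle discount are hypothetical data, and the approach is only practical for small catalogues, because the number of combinations grows multiplicatively with every component type.

```python
from itertools import product

# Hypothetical catalogue: component type -> list of (part name, price).
CATALOGUE = {
    "disk": [("Disk-A", 120), ("Disk-B", 100)],
    "memory": [("RAM-X", 80), ("RAM-Y", 60)],
}
# Hypothetical pairs of parts that do not work together.
INCOMPATIBLE = {("Disk-B", "RAM-Y")}
# Hypothetical cross-promotion: discount if both parts are bought together.
BUNDLE_DISCOUNTS = {("Disk-A", "RAM-X"): 50}

def cheapest_configuration(catalogue, incompatible, discounts):
    """Try every combination of one part per type; return (parts, total) of the cheapest valid one."""
    best = None
    for combo in product(*catalogue.values()):
        names = [name for name, _ in combo]
        pairs = {(a, b) for a in names for b in names if a != b}
        if pairs & incompatible:
            continue  # skip configurations containing an incompatible pair
        total = sum(price for _, price in combo)
        total -= sum(d for bundle, d in discounts.items() if set(bundle) <= set(names))
        if best is None or total < best[1]:
            best = (names, total)
    return best
```

Here the individually cheapest parts (Disk-B and RAM-Y, 160) do not work together, and the cross-promotion makes Disk-A with RAM-X the best buy at 150 instead of 200. A simple price comparison of each component on its own would miss both effects.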

Table 3: Overview of Shopping Agents

The following table contains a selection of shopping agents that were available at the time of writing. For a complete and updated list, please go to http://www.smartbots.com/.

| Name | Description |
|------|-------------|
| Acses | The ultimate shopping agent for online bookstores. |
| Auction Watchers | This specialized agent allows you to scan prices on Internet auctions for computer equipment. |
| Bargain Finder | Specialized in finding compact discs. Uses nine online shops for comparing products. |
| E-Smarts | Complex Internet guide with shopping agents for bargain shopping on the Web. |
| Jango | Offers price comparison of computer products. |
| MySimon | MySimon can be taught to shop in new online shops with the customer's guidance. |
| Shopper.com | Compares 1,000,000 prices on 100,000 products that are available online. |

6.4 Portal Sites, the New All-in-One Mega Web Sites

6.4.1 Growing Together

A portal is a "World Wide Web site that is or proposes to be a major starting site for users when they connect to the Web or that users tend to visit as an anchor site" (as defined by the whatis online dictionary). Portals contain lots of content in the form of news, information, links and many services, such as ordering flowers, books and CDs or free e-mail and web space. As locating desired material on the Internet becomes more difficult, the value of fast, reliable and simple-to-use portals increases.

Portals are actually nothing new to the web. AOL and CompuServe in the United States, T-Online in Germany and Ireland Online, for example, have offered something similar for years. All of these online services have had a private web site with special services for their customers, such as online banking, free e-mail, search engines, chat forums and online shopping malls. The difference between these first-generation portals and the current wave of portals is the target audience. With the first generation, only AOL subscribers could see the AOL portal pages. The second generation, which is already online, is not restricted to the subscribers of a particular online service but can be accessed by anyone with a web browser.

Online services opened up their private portal sites because search engines, like Excite, and directories, like Yahoo, had started to offer services in addition to being a directory or a search engine. These services are accessible via a web browser from anywhere, without the need to subscribe to a particular online service. Digital's search engine AltaVista, for example, added instant online translation as an additional service, whereby any web page can be translated on the fly while it is downloaded from its server. Yahoo offers free e-mail and a news service. You can even download a little program from Yahoo's web site that displays a ticker in the task bar of Windows computers, showing the latest developments in politics, finance and sports.

The search engines and directories had to add new services to distinguish themselves from the vast number of similar services. There are more than 100 international search engines and more than 15 to 20 search engines per country, ranging from hundreds for Germany (like web.de) and the UK (such as GOD) to Fiji-Online. As a result, they entered the domain of the portal web sites. The search engines were already the most attractive sites on the web, but with the addition of new services they were able to enhance their customer loyalty.

By offering additional services through registration, portals know quite well who their customers are and are able to respond to their wishes fast, which is very important if you live off banner advertisements. One-to-one marketing is the key to customer loyalty.

The third large group that became portals is the software distribution sites, like Netscape's NetCenter and Tucows. The former is the largest portal in the world, worth more than $4.3 billion (the price AOL paid Netscape for NetCenter in November 1998), and the latter is one of the largest archives of shareware and public domain software on the web. Both faced the same problem: they needed to distinguish themselves from their competitors. On the Internet there are only a few ways to distinguish oneself from the competition: price, speed and service. If you do it right, you are cheaper, faster and offer better service.

The fourth large group of web sites that became portals were the sites offering free services, like e-mail and web space; Global Message Exchange and Xoom, to name one service from each of the two categories. As users come to these sites regularly to check their e-mail or to update their web sites, these sites have a high potential for becoming portals.

Jim Sease offers a "meta-portal" on his web site. He links to all sorts of portals where users can get additional information. This may be useful for selecting a portal but once you have found "your" portal, you will most probably stay with it and ignore the others.

Converting traditional shopping malls into digital shopping malls failed in most cases, because they only replicated the environment and not the information. Having twenty shops within one domain is no help to the customer; a link to a page within the domain is just as far away as a link to another country. Portals combine shopping and information and pass the relevant information on. Users enter their requirements once and are guided through the possibilities of the portal. Most portals save user profiles to appear even smarter: they remember what particular users did last time and are good at guessing what they may want to do this time, presenting them with the information and products they are most likely to be interested in.

6.4.2 Digital Neighbourhoods

Free web space providers such as GeoCities offer a wide range of neighbourhoods, where customers can find other people with similar interests. Adding services, information and product offerings to these neighbourhoods will create a zone with added value for every participant. The zones create a virtual market space which is highly specialized. The zone on a certain portal can act as an umbrella for many companies of all sizes. These virtual marketplaces also make it easier to sell advertising space to potential advertisers, as the viewers are most likely to be of a certain target group.

If you look at various portals, you will find services that you have already seen elsewhere. Portals tend to buy existing services and incorporate them into their web sites; this is called co-branding. Netscape, for example, uses the Florists Vicinity and Excite uses the e-mail address book from WhoWhere.com. Building up such services themselves would break most portals financially, so they co-operate with existing online service providers. For users it makes no big difference whether they click on Altavista's news section (which is provided by ABCNews) or on the ABCNews web site. The real advantage is the tight integration of different services: read a news story on Altavista and search for sites related to that topic with one click, or search for a keyword on Yahoo and get a list of reference books (with kind support from Amazon.com).

Another start-up service provider is ConSors, an online brokering service company. To their direct customers, who come to their web site, they do not offer any support. They want only the "intelligent customers" who are able to help themselves. But at the same time they are offering the brokering service to online banks, who can include the raw service into their product portfolio and offer consulting and other things around this raw service. This new paradigm of providing core services to portals has been taken on-board by Hewlett-Packard's E-Services campaign. An in-depth review of the paradigm can be found in Chapter 15.

6.4.3 Becoming a Portal Player

Becoming a portal player is not difficult. All you need is interesting content, which is unique on the Internet, and a good search engine to start with. With some HTML knowledge you can build a simple portal by adding your favorite links, a search mechanism, links to free e-mail and chat and news feeds, thus creating a homepage of your favourite bookmarks. Your browser bookmark file is a form of private portal. As every page on the web has the same priority, there is no reason why five million people should not access your homepage instead of AOL's. All you need is good marketing and a solution for these five million people.

To become a successful portal, you need to add services that integrate tightly into your online offering. Integration is the main reason why so many people choose portals over individual sites. Once you have registered with a portal, the information can be reused for all services within the portal. Although some may not like the idea of cross-selling personal information, most people do like the idea of their own personalized portal site. Once you have entered your credit card information to buy a book via a portal, this information can be reused to pay for something else within the portal, or the portal can offer the same customer other products that complement the last purchase. The user does not need to re-enter any information. Being a major browser producer helps a lot in establishing a portal: Netscape and Microsoft have highly successful portals because their homepages are the default homepages of their browsers.

The Go Network, for example, invites its customers to search for web pages using its web directory. News headlines and financial data are shown on the page, and it is possible to get a free e-mail account. Subscribers can sign in and customize the page to their individual needs. Figure 3 shows my customized page on the GO portal site.

Figure 3: The Portal Site of GO Networks

While building up your portal, it is helpful to offer free services like web space, free e-mail, chat rooms, newsgroups and free software for download. This will get the users' attention in the first place: they will come to your portal and try it out. To keep customers coming back to your web site, you need up-to-date news feeds, games, online help and some online shopping possibilities. It does not matter whether you build up your own service or buy it from someone else. In most cases customers won't notice, but they will notice how easy (or difficult) it is to navigate within your portal and find information on a certain topic. Although portal owners have services directly included in their system, they should never exclude other services from their search engine. Customers should never have the feeling that the search engine only shows preferred suppliers. If customers want to get in touch with another company, the search engine must be able to provide them with the required information; otherwise they will go on to the next portal, where they feel that their requirements are better met. The services offered on the portal site should always be presented as a suggestion, not as a must.

Search capabilities must be the top priority for your portal, as search is the most frequently used service on the Internet. Information on the site needs to be organized in zones (sometimes called channels or guides) on general topics like Computers, Travel, Arts and Cars.

Each zone combines all the services offered for that particular topic. If, for example, you select the Entertainment section on Yahoo, you will get not only the directory entries for that topic but also links to related news and current events. You can join a virtual community through online chats, clubs or message boards, and you get a list of online services related to entertainment, such as online auctions, a classified section, yellow pages and online shopping (with a direct link to CDNow). You can even see a listing of cinemas with timetables. All these services are available through a single link labeled "Entertainment". Two or three years ago you would have found only a long list of web sites with more information on the topic, but no services at all.
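The zone concept can be sketched as a simple data structure in which one topic bundles directory entries, news, community features and online services, so that a single link returns all of them. This is a minimal Python sketch under stated assumptions: the class `Zone`, the function `open_zone` and the sample entries are hypothetical and do not describe how Yahoo actually builds its sections.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Zone:
    """A topic zone (channel/guide) bundling every service for one topic."""
    name: str
    directory: List[str] = field(default_factory=list)  # links to web sites on the topic
    news: List[str] = field(default_factory=list)       # related headlines and events
    community: List[str] = field(default_factory=list)  # chats, clubs, message boards
    services: List[str] = field(default_factory=list)   # auctions, classifieds, shopping

    def page(self) -> Dict[str, List[str]]:
        """Everything shown after the user clicks the zone's single link."""
        return {
            "directory": self.directory,
            "news": self.news,
            "community": self.community,
            "services": self.services,
        }


# Hypothetical portal: zones keyed by the link text the user clicks.
PORTAL: Dict[str, Zone] = {
    "Entertainment": Zone(
        name="Entertainment",
        directory=["Movies", "Music", "Television"],
        news=["Entertainment headlines"],
        community=["Movie chat", "Music club", "TV message board"],
        services=["Auctions", "Classifieds", "Yellow pages",
                  "Online shopping", "Cinema listings"],
    ),
}


def open_zone(link_text: str) -> Dict[str, List[str]]:
    """Return the combined zone page for one link, e.g. "Entertainment"."""
    return PORTAL[link_text].page()
```

Calling `open_zone("Entertainment")` returns directory, news, community and service entries together, which is the difference from the older "long list of web sites" approach.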

6.4.4 Portal Owners and Service Providers

Portals have two major players: the portal owners and the service providers who add functionality and services to a portal. Depending on the structure of your company and your plans for the future, you should become one or the other, ideally both.

There are two different types of portals on the Internet: horizontal portals, which cover a wide range of topics, and vertical portals, which are smaller and have a tightly focused content area geared towards a particular audience. Gallery-Net, for example, offers a directory of artists, art-related sites, online galleries and virtual museums. In addition, it offers free e-mail, a chat room, web space and a shopping area. It appeals mainly to art lovers and artists, but still offers a wide range of activities from this one site, making it the portal of choice for many people around the world.

Another example is the web site of Gravis in Germany, which I visited in December 1998. Although Gravis is the biggest reseller of Apple Macintosh products in Germany, its web site consisted mainly of scanned images of the printed brochure. There was no way to get any additional information on the products or on prices, and it was not even possible to buy anything online. There were no online chats, no newsgroups, no FAQs, just a few images of 100-200 KB each, and that was it. Gravis has an extremely high potential to become a major Mac portal for Germany, but unfortunately the company did not see the potential of the Internet when I contacted it in December 1998. I asked about their new G3 accelerator boards, and it took me three e-mails to explain what I was looking for on their web site. When they finally thought they had understood, they sent me the URL of the producer, Phase 5. I don't think I need to comment on this reaction. The people in their customer care center have to learn how the Internet works.

If you and your company are interested in only one specific topic, it is quite easy to build up a portal using your knowledge and your products. If, for example, a company sells dog food, creating a vertical portal web site would mean adding search functionality not only for that particular site, but for the whole Internet. The site would need to offer online chats, e-mail, web space, newsgroups and all the other things discussed above, tailored to the needs of dog owners. The goal is a portal that all dog owners would keep coming back to. The vertical portal could be a zone in a horizontal portal, but with more detailed information and services than a general portal like Yahoo or NetCenter could offer. A dog food company could, for example, ask Excite to include its services for dog owners in the Excite portal, or even offer to run the dog zone on Excite's portal web site as an outsourced service.

As you can see, becoming a small portal is easy and can be a threat to the large ones, if done well. Be careful about offering services that are not related to your company. If these services fail, your company will be held responsible for it. So choose your partners carefully.

6.4.5 Personalizing the Online Experience

To return to personalization and one-to-one marketing (personalization is typically driven by the customer, one-to-one marketing by the business): portals use these techniques to deliver precisely the information customers need, presented the way they want it. The user profile, which consists of information the customers have entered and information gathered while they browse the site, is used to organize the portal in the way that is most convenient for them. The simplest form of personalization has been described above: all available services are focused on the customer's search topic. The next level is to provide local weather reports and other local news of interest (e.g. movie listings). This requires users to enter their home town or zip code and the country they live in. Even this small amount of information allows the portal to become much more personal, and the more information customers are willing to give, the better the portal adapts to their needs.
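As a rough illustration of how each extra piece of profile data unlocks more personal content, the following Python sketch assembles a list of home-page modules from a profile. The `Profile` fields, the module names and `build_home_page` are hypothetical assumptions chosen for illustration, not the data model of any real portal.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Profile:
    """What the customer has told the portal so far (all fields optional)."""
    name: Optional[str] = None
    zip_code: Optional[str] = None
    country: Optional[str] = None
    interests: Tuple[str, ...] = ()


def build_home_page(profile: Profile) -> List[str]:
    """Assemble home-page modules; every extra field unlocks more personal content."""
    modules = ["Search", "Top headlines"]            # shown to everyone
    if profile.name:
        modules.insert(0, f"Welcome, {profile.name}")
    if profile.zip_code and profile.country:         # location unlocks local content
        where = f"{profile.zip_code}, {profile.country}"
        modules.append(f"Weather for {where}")
        modules.append(f"Movie listings near {where}")
    for topic in profile.interests:                  # declared interests add topic news
        modules.append(f"News: {topic}")
    return modules
```

An anonymous visitor gets only the two generic modules; adding a zip code and country adds local weather and movie listings, and each declared interest adds a news module.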

Most portals have personalized home pages where customers are greeted by name and get the latest information on their stocks, the latest news headlines on selected topics, a notice of whether they have received new e-mail, and a weather forecast for the next few days. Upcoming birthdays of relatives within the next five days pop up, and customers can see whether their chat partners are online as well.

All this appears automatically each time customers enter their portal. From there they can search for topics and use online services, and most probably they will use the search engine and online services offered by the portal. Tracking the movement of users gives feedback on the usability of the web site. For example, a piece of information may need to be put onto the home page because 80 percent of the users want to know about it, but only 20 percent are able to find it. Tracking also gives feedback on user preferences (for example, that all users go to the "Cars" zone). This in turn helps the portal owner to enhance the services and the information flow. The major obstacle is persuading users to provide the information required to deliver these services to their personalized home page. See the section on "Privacy on the Internet" in Chapter 10 for more information on this topic.
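The 80/20 example can be turned into a simple log analysis: compare the share of users who wanted a topic (for example, because they searched for it) with the share of those same users who actually reached its page, and flag topics with a large gap as home-page candidates. This Python sketch assumes hypothetical `(user, topic)` records and a hypothetical threshold `min_gap`; a real portal would first have to derive such records from its server logs.

```python
from collections import defaultdict
from typing import Iterable, List, Tuple


def home_page_candidates(
    wanted: Iterable[Tuple[str, str]],
    found: Iterable[Tuple[str, str]],
    total_users: int,
    min_gap: float = 0.5,
) -> List[Tuple[str, float, float]]:
    """Return (topic, wanted_share, found_share) for topics many users want
    but few of them find, largest gap first.

    wanted: hypothetical (user_id, topic) records, e.g. from search queries
    found:  hypothetical (user_id, topic) records, e.g. visits to the topic page
    """
    wanted_by = defaultdict(set)
    found_by = defaultdict(set)
    for user, topic in wanted:
        wanted_by[topic].add(user)
    for user, topic in found:
        found_by[topic].add(user)

    candidates = []
    for topic, users in wanted_by.items():
        wanted_share = len(users) / total_users
        # Count only users who wanted the topic *and* managed to reach it.
        found_share = len(users & found_by[topic]) / total_users
        if wanted_share - found_share >= min_gap:
            candidates.append((topic, wanted_share, found_share))
    # Biggest unmet demand first.
    candidates.sort(key=lambda c: c[1] - c[2], reverse=True)
    return candidates
```

With ten users, eight of whom search for a hypothetical "store hours" page while only two of them reach it, the function reports that topic with shares 0.8 and 0.2; a topic that everyone who wants it also finds is not reported.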

6.4.6 Must-have Features for a Portal

Besides the search engine, e-mail and online chats are the most interesting services for Internet users. Offering free e-mail may seem superfluous, as everybody gets a free e-mail account with his or her online access, but there are several reasons why users may want additional e-mail accounts.

Firstly, it could well be that several family members share one account and want to send and receive private e-mails without paying for an extra account from their online service provider.

Secondly, e-mail accounts that are accessed through a web browser and do not require a special e-mail program are very attractive to people who travel a lot. Just walk into the nearest Internet café, or ask someone in an office with an Internet connection, and within seconds you can read and answer your mail. Although web-based e-mail is far from comfortable or fast, it offers a simple way to stay in touch while traveling. More advanced users may prefer to telnet to their accounts and use old-fashioned Unix programs such as pine or elm to check their e-mail (or even telnet to the mail server on port 110, which opens a standard POP3 connection).
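For readers curious about what "telnet to the mail server on port 110" involves, the Python sketch below lists the POP3 commands (defined in RFC 1939) that a user would type in such a session, and parses the server's reply to `STAT`, which reports the number of messages and their total size in octets. The user name, password and function names are hypothetical; the sketch only builds and parses text and does not open a network connection.

```python
from typing import List, Tuple

POP3_PORT = 110  # standard POP3 port


def pop3_session_commands(user: str, password: str, message_number: int = 1) -> List[str]:
    """Commands typed, one per line (CRLF-terminated), after telnetting to port 110."""
    return [
        f"USER {user}",            # identify the mailbox
        f"PASS {password}",        # authenticate (sent in plain text)
        "STAT",                    # message count and total size in octets
        "LIST",                    # size of each message
        f"RETR {message_number}",  # fetch one message
        "QUIT",                    # end session; server removes messages marked deleted
    ]


def parse_stat(reply: str) -> Tuple[int, int]:
    """Parse a STAT reply such as '+OK 2 320' into (message_count, total_octets)."""
    parts = reply.split()
    if not parts or parts[0] != "+OK":
        raise ValueError(f"server reported an error: {reply!r}")
    return int(parts[1]), int(parts[2])
```

A reply of `+OK 2 320` to `STAT` means the mailbox holds two messages totalling 320 octets; replies starting with `-ERR` signal a failed command.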

Thirdly, people who move frequently may not be able to take their e-mail account with them, because their online service provider is not available in the new location. With a virtual e-mail address, they have one address that is valid wherever they live.

A fourth reason is to have fun accounts. MOE, for example, offers a wide range of different domain names, ranging from for-president.com to tweety-bird.com (my e-mail address could then be danny@for-president.com or danny@tweety-bird.com). MOE is now in the process of becoming a portal as well, adding new services such as web space (in my example, I could get the URL http://danny.for-president.com/ if desired) and weather reports.

Giving away free web space was what GeoCities offered in the first place. Now it is one of the largest portals on the Internet, offering everything from virtual communities, online shopping and a search engine to stock quotes and many other things.

Online chats are something really wonderful for telephone companies and online services that charge per minute or per hour. Once you have started a chat, it takes quite a while until you really get engaged in a conversation (unless you know the people already) and once you have started it is difficult to stop. Every portal offers online chats, ranging from Java-based chats to CGI chats. In-depth information on online chats can be found in Chapter 8.